Sorry, that was the best humorous line I could think of on short notice.

I’m working on developing an application for managing requirements and RFPs/RFIs for LIMS acquisition projects, and as part of that, I was asked to take a look at appFlower and use that to build the app. In the interest of documenting my findings with regard to appFlower, I figured it would be most useful to blog about things as I go along.

However, to begin, let me provide a little background as to what appFlower is. appFlower is a somewhat unique beast in that it is an application development framework built on… another application development framework. This other application framework is Symfony, a PHP framework somewhat akin to Rails for the Ruby development crowd. In the interest of full disclosure, I should reveal that I’m well acquainted with Rails, so forgive me if that colors any of this commentary.

As far as I can tell, there were a couple of goals behind the creation of appFlower. One was to shift from Symfony’s extensive use of YAML for configuration to XML, which one can argue is the more broadly used standard. The second goal, I believe, was to provide a rich UI for creating the various forms, etc., that you might desire in a basic web application. As a result, you can build some basic lists and data entry forms with a fairly nice UI, and add and edit records, with effectively no programming. That in itself is worthy of a couple of gold stars.

One important thing to realize when discussing rapid application development (RAD) frameworks is that they fail immediately on one key element of their basic sales pitch: “Build web apps in minutes”, or my favorite from the appFlower site, “Why write code that can be created automatically? Skip learning SQL, CSS, HTML, XML, XSLT, JavaScript, and other common programming languages (Java, Ruby and .Net)”. This latter statement, besides being incorrect, is also an example of the sort of verbal sleight of hand most commonly seen in political advertising. Note that the statement doesn’t mention PHP, which is at the heart of appFlower. You’d better know PHP if you want to do anything really useful here. You will also need to know CSS if you want the app to look any different than what you get out of the box, and you will certainly want to be able to deal with XML. You won’t need to know Java, Ruby, or .Net, but only because those aren’t the languages of this framework, which makes that part of the claim somewhat disingenuous. I expect I’ll be writing JavaScript along the way as well. Oh, and you may not need to know SQL (we’ll see about that), but you will need to understand basic RDBMS concepts.

All application frameworks, and appFlower is no different, are intended to help seasoned developers move along more quickly by building the basic pieces and parts of a web application automatically. All that is left is adding the functionality you need for your specific requirements. Because the framework supplies those pieces, the learning curve is reduced for everyone. At the same time, there is a feature of these frameworks that can be frustrating. Frequently you will find that if you simply put in a URL with a keyword like edit, the app just knows what you intended and you get the desired result. For instance, in appFlower, if you create an action and associate it with an edit widget, the app figures out what to do from the context: if the action is associated with a row of data, it knows you want to edit that row, and if it isn’t, it knows you want to create a new row. However, you’ll never know whether the app will be able to figure out what you want until you try it and get an error message. Which, by the way, is important safety tip #1: turn on debugging. Otherwise you will never have any idea of what is happening. Even then, the messages may not be that helpful. Like my favorite, “Invalid input parameter 1, string expected, string given!” How silly of me to give the app what it expected.
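To make that kind of context-driven guessing concrete, here is a minimal sketch of the idea in Python. To be clear, this is not appFlower’s code (appFlower is PHP) and I haven’t seen how it is implemented internally; `resolve_action`, `row_id`, and the returned strings are hypothetical names I made up for illustration.

```python
from typing import Optional


def resolve_action(widget: str, row_id: Optional[int] = None) -> str:
    """Guess the intended operation from context, framework-style.

    Hypothetical helper: names and return values are illustrative only.
    """
    if widget != "edit":
        # No convention exists for this widget, so fail loudly
        # instead of guessing.
        raise ValueError(f"no convention for widget {widget!r}")
    # A row id means the action was fired from an existing row: edit it.
    # No row id means there is nothing to edit, so create a new row.
    return "update" if row_id is not None else "create"


print(resolve_action("edit", row_id=7))  # action attached to a row
print(resolve_action("edit"))            # action not attached to a row
```

The guess only works for the cases the framework author anticipated. Anything outside those conventions ends in an error, which is why having debugging turned on matters so much.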

As I was building a simple screen to add categories to assign to requirements, I wanted to add a delete button. So I looked for an example, and the only one exists in a canned plugin. In other words, I couldn’t find, even in the walkthrough for creating an app, the simple process of deleting a piece of data. Perhaps the appFlower team doesn’t think you should ever delete data. Typically, when you assign a URL to an action, they tell you to just specify the filename of the widget you just created (these files are typically XML files). However, in the case of deleting, there is no widget, even in the plugin. So I thought maybe the app simply knows what to do if I say delete (as in the plugin), but that didn’t work. deleteCategory? Nope. Finally, I dug through the code to find the right action class PHP file for the plugin, and from that worked out which file I needed to edit to create the action. Then I used the model code in the plugin as the basis for the code I needed. In PHP. In other words, this is not some magical system like Access, or FileMaker, or 4D that allows you to quickly bring up a fully functioning app with no coding. You will need to code.

This takes me to the next issue, which is support. Support appears to consist primarily of a couple of guys who run appFlower and occasionally answer questions. Perhaps if I weren’t using the free VM that I could download, it would be different. Paid support usually gets a better response. I’ve got a number of problems and questions, including the one about how to delete a row of data. Three days in and no response. Other questions and problems have been sitting in the queue for much longer. Don’t plan on a lot of help with this. Unfortunately, unlike Rails (and probably Symfony itself), there is not yet a robust community of users and experts out there to help answer your questions. The only way to figure things out is from the documentation.

There is an extensive documentation set and some nice examples. Unfortunately, the documentation lags development, and sometimes in a significant way. I attempted to set up the afGuard plugin, which handles authentication and security. Unfortunately, if you follow the docs (there are a couple of different processes described), you will not be successful. For instance, widgets and layouts that the docs claim exist do not. It turns out you don’t need to copy anything yourself either; you can reference the files where they are by default, and this is the recommended behavior. Recommended by the developer, at any rate, who happened to answer this question. The documentation tells you to copy the files elsewhere. I’ve tried a couple of times to get security working, but in both instances the app just stopped working. Since I couldn’t get any response to the problems I posted on the site, I deleted the VM and started over again.

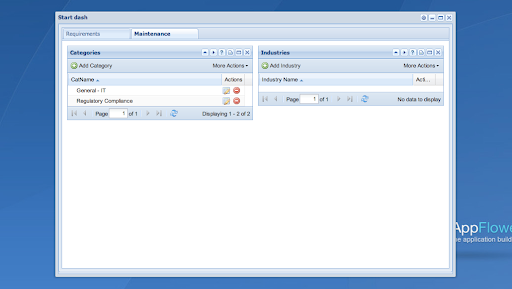

I’m on my third time now, and am waiting until later to try afGuard again. I will also make sure to back up the app and the data before I start, so I can restore the work I’ve done without having to wipe the slate and start over. The latest rendition of the app is moving along nicely. Let me tell you what has been easy, especially since I’ve been so negative. Dealing with my own tables and widgets is one example: once you read a bit through the docs and look at a couple of examples to understand the approach, you can very quickly generate a nice tabbed interface with lists and forms to edit. The framework gets one-to-many relations, and if you put in a related field to edit, it will by default assume you want to create a drop-down list with the description field from the other table (which most of the time is precisely what you want to do). Adding and updating records, and creating those buttons, is trivial. I now know how to delete records, so that code will be transportable. I don’t have it working perfectly yet. Apparently there is a template I need to create that will pop up and let you know how things went in the delete attempt. I don’t think that is documented anywhere, so I will have to see what I can figure out. Here is one page of the app as it stands right this second.

So, I certainly didn’t get this up in minutes. If I had left security out, perhaps that would have been closer to the case. The question is, did I save time vs. using straight Symfony or Rails? Compared with Rails, I can say categorically, no. This is because I know Rails and I can get a basic app, including authentication, up very quickly. If I had used straight Symfony, I would have had to install the framework, then get used to the way it generates files, and get up to speed on PHP, which I hadn’t looked at in quite a while. So, appFlower may have saved some time there. Once I know the framework well, future apps will likely move along much faster, and maybe even faster than with Rails.