Why?

Recently, I’ve deployed different Rails apps to three different Linux servers from scratch. All three were virtual servers: two were Ubuntu 12.04 servers running Apache, and the most recent is a Debian 6 server running Nginx. Each server took less time to set up than the one before, but each time I found myself grabbing bits and pieces from Stack Overflow and other sites to get the job done. So I’ve decided to post the notes I made on this most recent deploy, in the hope that others can benefit from my trials.

The Host

For all of my basic websites and e-mail, as well as a couple of old Rails applications, I’ve used Dreamhost shared servers. For websites this has worked out well: it is a very cost-effective way to manage them, with little downtime and few issues overall. With Rails, however, there is a major issue. While I understand the desire to avoid the bleeding edge, Dreamhost has remained rather rooted in the distant past. I long ago stopped updating my first application, the one I use to manage our parish bookstore, simply because I can’t move to any current gems or technologies.

However, Dreamhost also offers a relatively low-cost Virtual Private Server option: a virtual server running Debian. There are various levels of configuration, from having Dreamhost manage most of the major options (web server, users, etc.) to managing it all yourself. In the few days I’ve used it, I’ve found only two negatives, and in my judgement neither is much of a problem. The first is that you have to use Debian; there is no other option. The only other company I can compare this to is Rackspace, which offers a wide variety of Linux flavors (and Windows, for an appropriately larger fee), although that flexibility comes at a somewhat higher cost. The second is chat support. With Rackspace, I usually have a very capable technician in the chat in under a minute. With Dreamhost it has taken 10 to 15 minutes, and I’ll admit the technicians aren’t as strong. Nothing dramatic, but there is an element of “you get what you pay for.”

While discussing hosts, I should mention my choice of code repository. I have opted to use bitbucket.org, an Atlassian product. Why? Well, it’s free, and the repository stays private. The only limitation is that I can’t have more than five users, and since these are apps I’m building myself, I doubt that will be an issue. You can, of course, use GitHub, but there you have to pay for private repositories. I’m a huge Open Source fan, but I don’t necessarily want everything I’m working on to simply be out there. At some point I may make some of these apps publicly available, but I like being able to start with them privately.

Okay, Let’s Get Down to Work

So, I provisioned the Dreamhost VPS, and since I got a week free, I opted to max out the memory available to the server. This proved beneficial, as some of the software installation processes are very memory intensive. The Passenger nginx module installer will complain if you have less than 1024 MB of RAM, and if your server doesn’t have that much, the server will reboot in the middle of the installation. I deselected every “Dreamhost Managed” option, perhaps even where I didn’t need to, figuring it would be safest to do my own installations. This included selecting no web server to begin with.
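Since the Passenger installer can take the server down when memory runs short, a quick check beforehand may save a reboot. What follows is a minimal sketch, assuming a Linux /proc/meminfo that reports MemTotal in kB; the function names are hypothetical, and the 1024 MB threshold is the figure quoted above.

```bash
#!/usr/bin/env bash
# Pre-flight memory check before running the Passenger nginx installer.
# Assumption: a Linux meminfo file whose MemTotal line is given in kB.
MIN_RAM_MB=1024

# Print total RAM in whole megabytes (hypothetical helper). The file argument
# defaults to /proc/meminfo and exists mainly so the check can be tested.
total_ram_mb() {
  local meminfo="${1:-/proc/meminfo}"
  awk '/^MemTotal:/ { printf "%d\n", $2 / 1024 }' "$meminfo"
}

# Succeed only when the machine reports at least MIN_RAM_MB of RAM.
enough_ram_for_passenger() {
  local mb
  mb="$(total_ram_mb "$@")"
  [ -n "$mb" ] && [ "$mb" -ge "$MIN_RAM_MB" ]
}
```

For example, `enough_ram_for_passenger && rvmsudo passenger-install-nginx-module` only starts the installer when the check passes (rvmsudo runs a command as root while keeping the RVM environment). MemTotal usually sits a little below a plan’s nominal size because the kernel reserves some memory, so a plan sold as exactly 1 GB can fall just short of this check.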

For most of the installation process, I followed the instructions at DigitalOcean. Yes, those instructions are for Ubuntu, but Ubuntu is a Debian derivative, so I didn’t run into any trouble. The only thing I did differently at the start was to run aptitude update and aptitude dist-upgrade to make sure everything I needed was available. I also followed the multi-user RVM installation instructions from the RVM website. Over time I have found various sets of instructions for installing RVM, and I’ve always found it best to simply go with the authors’ own.

Everything else installed as indicated (I did opt for Ruby version 2 instead of 1.9.3).
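The preparation steps above can be condensed into one function. This is a sketch, assuming a root shell on the VPS, the get.rvm.io installer URL published on the RVM site, and a hypothetical deploy user name; the RVM site remains the reference (current versions of its instructions also ask you to import RVM’s GPG keys before running the installer). Setting DRY_RUN=1 prints the commands instead of running them.

```bash
#!/usr/bin/env bash
# Sketch of the base setup on a fresh Debian 6 VPS, run as root.
# Set DRY_RUN=1 to print the commands instead of executing them.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

base_setup() {
  local deploy_user="$1"   # hypothetical: the account you log in and deploy with

  # Refresh the package lists and bring the whole system up to date.
  run aptitude update
  run aptitude -y dist-upgrade
  run aptitude -y install curl

  # Multi-user RVM install (lands in /usr/local/rvm), per the RVM site.
  run bash -c '\curl -sSL https://get.rvm.io | bash -s stable'
  # Membership in the rvm group lets that user install and switch rubies.
  run usermod -a -G rvm "$deploy_user"

  # Ruby 2.0 rather than 1.9.3; a login shell picks up /etc/profile.d/rvm.sh.
  run bash -lc 'rvm install 2.0.0 && rvm use 2.0.0 --default'
}
```

For example, `DRY_RUN=1 base_setup deployer` lists the commands for review, and `base_setup deployer` run as root executes them. The new group membership takes effect at the user’s next login.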

Nginx

I opted for Nginx for a couple of reasons. The first is that I didn’t need all of Apache’s capabilities just to run Rails applications. Down the road I do expect to use Solr, but I believe its installation brings its own server (the Solr distribution includes Jetty), so it shouldn’t need Apache either. Nginx is also supposed to have a relatively small memory footprint, which matters since I’m paying for memory, and it is supposed to be faster. I haven’t run my application on it long enough to judge, but time will tell.

When you are done running the above instructions, it is likely that Nginx won’t work. 🙂 Surprise.

I believe the problem was residual Dreamhost Nginx pieces on my server, most notably the nginx init script in the /etc/init.d directory. For those who are adept at fixing Linux scripts, repairing the existing one probably isn’t very difficult. For my part, though, I just grabbed the script on this page about setting up Debian and Rails. The script is not entirely robust, as I find myself needing to kill the nginx processes manually when I need to restart them, but that isn’t much trouble and I’ll likely fix it later. Apart from making the script executable and ensuring that it runs at startup, I mostly ignored that page. Partly this is because the default Debian install from Dreamhost already takes care of much of it. The other reason has to do with RVM: I’ve long since learned the advantages of using RVM, so installing Ruby manually seems like a bad idea. There are some other interesting-looking parts on that page, so I suspect it is more generally useful than my use of it suggests.
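Registering the replacement init script and keeping a manual restart fallback might look like the sketch below. It assumes nginx was built by the Passenger installer under /opt/nginx, so the binary is /opt/nginx/sbin/nginx and the pid file is nginx’s default /opt/nginx/logs/nginx.pid; the function names are hypothetical, and DRY_RUN=1 prints the commands instead of running them.

```bash
#!/usr/bin/env bash
# Sketch: register a replacement /etc/init.d/nginx and keep a manual fallback.
# Assumptions: nginx was built by the Passenger installer under /opt/nginx, so
# its binary is /opt/nginx/sbin/nginx and its pid file /opt/nginx/logs/nginx.pid.
NGINX_BIN="${NGINX_BIN:-/opt/nginx/sbin/nginx}"
NGINX_PID_FILE="${NGINX_PID_FILE:-/opt/nginx/logs/nginx.pid}"

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Make the init script executable and add it to Debian's boot sequence.
enable_nginx_init() {
  run chmod +x /etc/init.d/nginx
  run update-rc.d nginx defaults
}

# Fallback when the init script will not restart nginx: ask the master process
# to shut down gracefully (SIGQUIT), wait briefly, then start a fresh one.
hard_restart_nginx() {
  if [ -f "$NGINX_PID_FILE" ]; then
    run kill -QUIT "$(cat "$NGINX_PID_FILE")"
    run sleep 2
  fi
  run "$NGINX_BIN"
}
```

Signalling the master process through its pid file avoids hunting down individual worker processes by hand.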

After making these changes, Nginx still just… didn’t work. The problem was the Nginx log files, which were all owned by root. That seems like a bad idea. I modified /opt/nginx/conf/nginx.conf to run as www-data and then changed the log file ownership accordingly. This user is very much unprivileged on the system, so it seems like a good choice to run nginx as (Debian’s Apache package runs as www-data too, so it should feel familiar to anyone who has worked with Apache).
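The two changes described above can be scripted roughly as follows. This is a sketch, assuming the Passenger-built layout (/opt/nginx/conf and /opt/nginx/logs) and a configuration whose main context either has a single, possibly commented-out, user line (the stock file ships with `#user  nobody;`) or none at all; the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: run nginx worker processes as www-data and give it the log files.
# Assumption: the Passenger-built layout, /opt/nginx/conf and /opt/nginx/logs.

# Set (or uncomment and change) the main-context "user" directive.
set_nginx_user() {
  local conf="${1:-/opt/nginx/conf/nginx.conf}" user="${2:-www-data}"
  local pattern='^[[:space:]]*#?[[:space:]]*user[[:space:]]+[^;]*;'
  if grep -Eq "$pattern" "$conf"; then
    # Replace the existing (possibly commented-out) directive in place.
    sed -i -E "s/${pattern}/user ${user};/" "$conf"
  else
    # No user directive at all: add one as the first line.
    sed -i "1i user ${user};" "$conf"
  fi
}

# Hand the log directory and its files to the nginx user (needs root).
fix_nginx_logs() {
  local logs="${1:-/opt/nginx/logs}" user="${2:-www-data}"
  chown -R "${user}:${user}" "$logs"
}
```

After running both as root, nginx has to be restarted for the new user to take effect.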

MySQL

MySQL installation was amazingly painless. I’ve had problems with it before, but I followed the instructions from cyberciti.biz, and all was well.
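The cyberciti.biz walkthrough is the reference here; the sketch below only shows the general shape. It assumes Debian 6 package names and that the app uses the mysql2 gem (hence the client headers). The database name, user and password are placeholders you supply, and DRY_RUN=1 prints the install command instead of running it.

```bash
#!/usr/bin/env bash
# Sketch: MySQL server plus client headers, then a per-application database.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

# libmysqlclient-dev provides the headers the mysql2 gem compiles against.
install_mysql() {
  run aptitude -y install mysql-server mysql-client libmysqlclient-dev
}

# Print SQL that creates a database and a user limited to it.
# Database name, user, and password are placeholders you supply.
app_db_sql() {
  local db="$1" user="$2" pass="$3"
  printf 'CREATE DATABASE IF NOT EXISTS `%s` CHARACTER SET utf8;\n' "$db"
  printf "GRANT ALL PRIVILEGES ON \`%s\`.* TO '%s'@'localhost' IDENTIFIED BY '%s';\n" \
    "$db" "$user" "$pass"
}
```

For example, `app_db_sql myapp_production myapp 'change-me' | mysql -u root -p`. The password is inserted without escaping, so avoid quote characters in it. GRANT ... IDENTIFIED BY creates the user on the MySQL 5.x versions of that era, but MySQL 8 removed that form.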

Deployment and Rails Tidbits

A lot of what I’m going to say here will likely result in a bunch of face palming by more talented developers than I, but since I’ve not done a lot of new deploys in the past, I still trip over amazingly trivial things, so I figure (hope) I’m not alone in this.

The first tidbit: remember to generate SSH keys on both your development machine and the server, and give the public keys to Bitbucket, so the source code can be downloaded during the deploy. By the way, I use Capistrano to deploy my Rails apps because I find it makes updates easier. Frankly, for an initial install I don’t think it helps much, but down the road you’ll be glad you used it.

When you create the key on your server, make sure you do not use a passphrase. Although the server will ask for the passphrase during the deployment, Capistrano doesn’t seem to actually transmit it, so your deploy will fail.
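A passphrase-less key can be created non-interactively. This is a minimal sketch, assuming OpenSSH’s ssh-keygen and the default ~/.ssh/id_rsa location; the function name is hypothetical, and an existing key is deliberately left untouched, so an old key that has a passphrase would have to be moved aside first.

```bash
#!/usr/bin/env bash
# Sketch: create a passphrase-less SSH key pair for the account that fetches
# the code (run it on both the development machine and the server), then
# print the public half so it can be added to Bitbucket.
make_deploy_key() {
  local key="${1:-$HOME/.ssh/id_rsa}"
  local dir
  dir="$(dirname "$key")"
  mkdir -p "$dir" && chmod 700 "$dir"
  # -N "" gives an empty passphrase; an existing key is left untouched.
  if [ ! -f "$key" ]; then
    ssh-keygen -q -t rsa -b 4096 -N "" -f "$key" || return 1
  fi
  cat "${key}.pub"
}
```

Paste the printed line into the SSH keys section of your Bitbucket account, then `ssh -T git@bitbucket.org` from each machine confirms that Bitbucket accepts the key.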

Also, don’t forget to run cap (stage) deploy:setup. I always forget to do that on the first install, then watch the deploy fail because the target directories don’t exist. Before you deploy, however, you should make the /var/www directory owned by www-data (chown www-data:www-data) and writable by the www-data group (chmod g+w). I should have mentioned that my deployment user on the server is a member of www-data, which makes it easier to make changes during the installation. It turns out, though, that giving www-data too many privileges globally is unwise. Plan on running the Rails application under a dedicated service account, and give that account permissions on the folders the application needs in order to run (typically just the public, tmp and log directories and their subdirectories, plus any custom directories you need to write to). The installation itself can run under your own user account.
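The permission setup above might be sketched as two functions, one for before the first deploy and one for after it. This assumes Capistrano 2’s default layout under /var/www/<app>, where log is a symlink into shared/; the deploy user and service account names are hypothetical, and DRY_RUN=1 prints the commands instead of running them.

```bash
#!/usr/bin/env bash
# Sketch of the permission setup, assuming Capistrano 2's default layout under
# /var/www/<app>. The deploy user and service account names are hypothetical.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Before the first deploy: the deploy user joins www-data, which owns /var/www
# and has group write access, so deploy:setup can create its directories.
prepare_web_root() {
  local deploy_user="$1"
  run usermod -a -G www-data "$deploy_user"
  run chown -R www-data:www-data /var/www
  run chmod -R g+w /var/www
}

# After deploying: a dedicated, login-less account owns only the directories
# the running app writes to. -H follows Capistrano's symlinked dirs (e.g. log).
grant_app_writes() {
  local app_root="$1" service_user="$2"
  local dir
  run adduser --system --no-create-home --ingroup www-data "$service_user"
  for dir in public tmp log; do
    run chown -R -H "${service_user}:www-data" "${app_root}/${dir}"
  done
}
```

A possible order is `prepare_web_root deployer`, log in again, `cap staging deploy:setup`, `cap staging deploy`, then `grant_app_writes /var/www/myapp/current railsapp`. Each deploy creates a new release directory, so the chown would need repeating, for example from a Capistrano after-deploy task.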

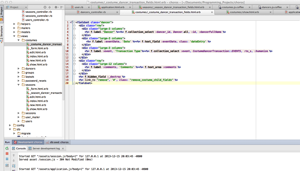

Two other issues I ran into involved bundler and a JavaScript runtime. When I ran the deploy, I received an error that there was no bundler. Running gem install bundler on the server didn’t help. I then discovered that I was missing require 'rvm/capistrano' at the top of my deploy.rb file, which is necessary for Capistrano deploys in an RVM environment.
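Adding the missing line can be automated with a small idempotent helper. This is a sketch; the function name is hypothetical. With newer RVM releases the rvm/capistrano file comes from the separate rvm-capistrano gem, and a multi-user (system-wide) RVM may also need `set :rvm_type, :system` in deploy.rb, so check that gem’s README for your versions.

```bash
#!/usr/bin/env bash
# Sketch: make sure config/deploy.rb requires rvm/capistrano, adding the line
# at the top if it is missing. rvm/capistrano runs remote commands inside an
# RVM shell, which is what lets the server find the RVM-installed bundler.
ensure_rvm_capistrano() {
  local deploy_rb="${1:-config/deploy.rb}"
  local line="require 'rvm/capistrano'"
  local tmp
  # Already present: nothing to do.
  grep -qxF "$line" "$deploy_rb" && return 0
  # Otherwise prepend it; writing back with cat keeps the file's permissions.
  tmp="$(mktemp)" || return 1
  { printf '%s\n' "$line"; cat "$deploy_rb"; } > "$tmp" && cat "$tmp" > "$deploy_rb"
  rm -f "$tmp"
}
```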

The JavaScript runtime issue is best dealt with by installing Node.js, which you can do by following the instructions here. You can go get a cup of coffee and a doughnut while the installer runs; it takes a while.
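Before starting the long build, it may be worth checking whether a runtime is already present. The sketch below assumes ExecJS (used by the Rails asset pipeline) finds Node under either the node or nodejs command name, and that you build from a source tarball on nodejs.org/dist; the version is yours to choose, the function names are hypothetical, and DRY_RUN=1 prints the build commands instead of running them.

```bash
#!/usr/bin/env bash
# Sketch: check for a JavaScript runtime, and build Node.js from source if
# none is found. The version is yours to choose from nodejs.org.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

# ExecJS (used by the asset pipeline) can use a "node" or "nodejs" binary.
has_js_runtime() {
  command -v node >/dev/null 2>&1 || command -v nodejs >/dev/null 2>&1
}

# Classic configure/make/make install build of a Node.js source tarball.
build_node() {
  local version="$1"   # a release number from nodejs.org/dist
  local src="node-v${version}"
  run aptitude -y install build-essential python
  run curl -O "https://nodejs.org/dist/v${version}/${src}.tar.gz"
  run tar xzf "${src}.tar.gz"
  run sh -c "cd ${src} && ./configure && make && make install"
}
```

The make step is the part that takes long enough for the coffee and doughnut; make install needs root.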

Another problem came from using Zurb Foundation. Since the Foundation CSS file doesn’t exist until the Sass is compiled, the application will not run when you access it from your web browser. So it is necessary to run bundle exec rake assets:precompile at the end of your installation. Apparently you also need the compass gem in your Gemfile ahead of the foundation gem.
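Both points can be checked from the shell. This sketch assumes the Foundation 4-era gem names compass-rails and zurb-foundation, which may differ in your Gemfile, and a release directory where bundle is available; the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: check Gemfile ordering and precompile assets in a deployed release.
# Assumption: Foundation 4-era gem names, 'compass-rails' and 'zurb-foundation'.

# Print the line number of the first `gem '<name>'` declaration, if any.
gem_line() {
  local gemfile="$1" name="$2"
  grep -nE "^[[:space:]]*gem[[:space:]]+['\"]${name}['\"]" "$gemfile" | head -n1 | cut -d: -f1
}

# Succeed when gem $2 is declared before gem $3 in Gemfile $1.
gem_declared_before() {
  local a b
  a="$(gem_line "$1" "$2")"
  b="$(gem_line "$1" "$3")"
  [ -n "$a" ] && [ -n "$b" ] && [ "$a" -lt "$b" ]
}

# Compile the asset pipeline's stylesheets and scripts into public/assets.
precompile_assets() {
  local release_dir="$1" env="${2:-production}"
  (cd "$release_dir" && RAILS_ENV="$env" bundle exec rake assets:precompile)
}
```

For example, `gem_declared_before Gemfile compass-rails zurb-foundation || echo "move compass-rails above zurb-foundation"`. With Capistrano 2, adding `load 'deploy/assets'` to the Capfile is a common way to run the precompile step on every deploy instead of by hand.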

Finally, if you are running multiple stages on your server (I have a staging, um, stage, for trying new things out with users), make sure the RAILS_ENV variable is set properly for each one. You can follow the instructions on the mod_rails (Phusion Passenger) site to do this.
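One way to do this with Passenger and Nginx is a separate server block per stage, each setting its own environment. The sketch below assumes Passenger 3 or 4, whose Nginx module accepts the rails_env directive (later Passenger releases call it passenger_app_env); the host names and paths are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: one nginx server block per stage, each telling Passenger which
# RAILS_ENV to use. Host names and paths are hypothetical.
# Assumption: Passenger 3/4, whose nginx module accepts "rails_env"
# (later Passenger releases call it "passenger_app_env").
server_block() {
  local server_name="$1" app_root="$2" env="$3"
  cat <<EOF
server {
    listen 80;
    server_name ${server_name};
    root ${app_root}/current/public;
    passenger_enabled on;
    rails_env ${env};
}
EOF
}
```

For example, `server_block staging.example.com /var/www/myapp-staging staging` prints a block to paste inside the http { } section of /opt/nginx/conf/nginx.conf, alongside a matching production block, before restarting nginx.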